What Pancakes Taught Me About Neural Networks

Lately I’ve been going deeper into these systems, thanks to the MIT course I’m currently taking on Generative AI and Digital Transformation. It’s sparked a flood of questions and reminded me how energizing it is to be back in a structured learning space. Part of what feels right is sharing that journey here, in the open. Not just for feedback or documentation, but because insights shouldn’t live in a Google Drive folder waiting for one evaluator. They should circulate, maybe even resonate. So here’s one.

In my day-to-day, I design creative systems: tools that help people think more clearly, collaborate faster, and keep some kind of shared rhythm. So when I started studying neural networks, I instinctively looked for metaphors that weren’t just technical, but human. How does a system feel when it learns?

I did the usual: asked a bunch of LLMs, skimmed diagrams, watched Ilya Sutskever talk about meta-learning. Interesting, but abstract. Keep in mind that this isn't my regular field, even if I'm a nerd. Then something clicked in a group chat, the most unexpected place.

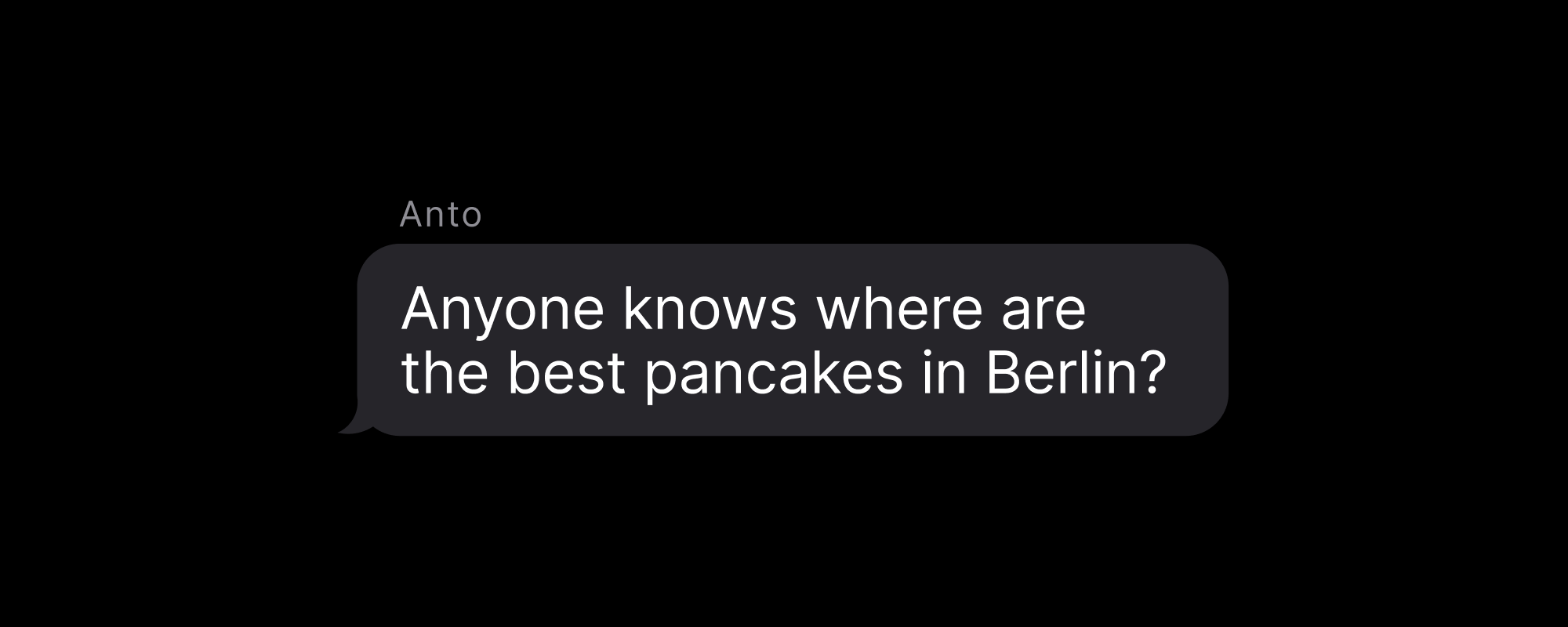

Someone asked:

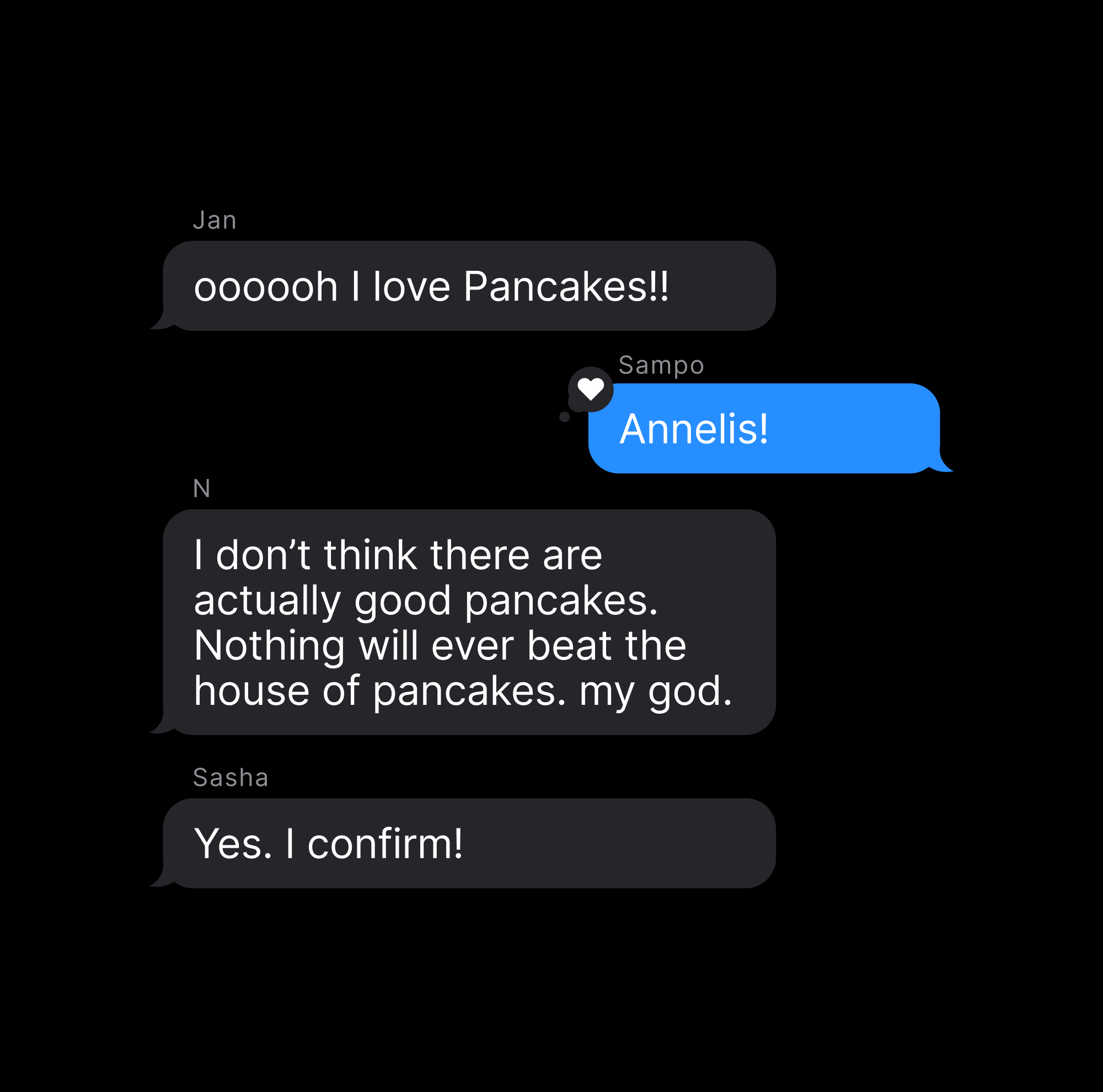

The chat lit up. Responses rolled in — some quick and confident, others hesitant. A few people just reacted with emojis.

Some repeated a name already said. Then someone added: “Honestly, Sam always has the best food recs.” That was it. Decision made.

I remember blurting out: “Guys, I think we’re a neural network!” Only the nerdy ones laughed, in emoji.

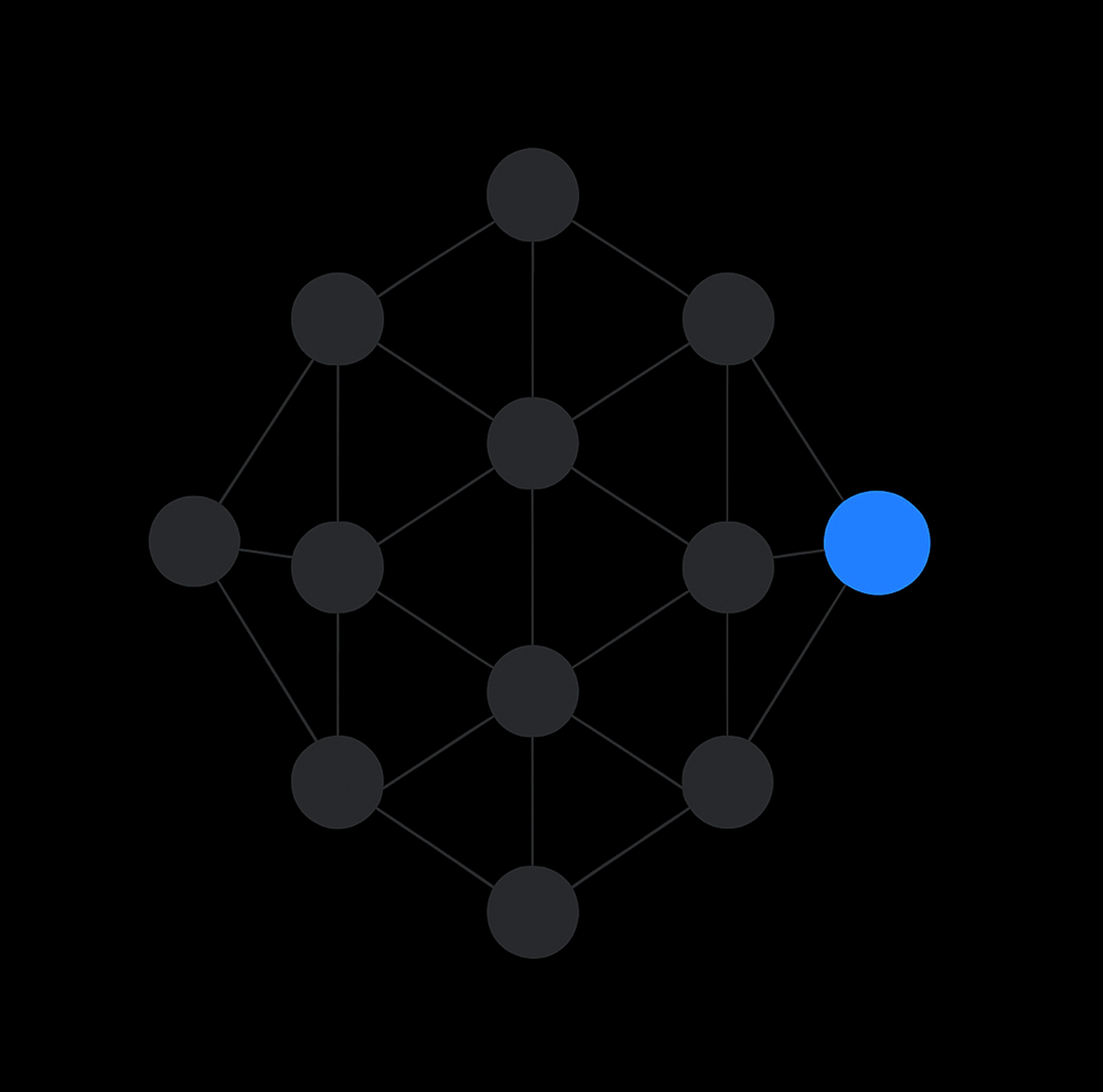

But I meant it. We’d all received the same input. Each person processed it through their own internal logic — past experiences, mood, hunger, social dynamics. Some outputs were loud, some subtle. And the final answer didn’t come from a vote, but from the path that had proven to be reliable. That’s how neural networks work. Each node does its own small part, and the system learns which signals matter.
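For the curious, here is a minimal sketch of that idea in Python. Everything in it is an assumption made up for illustration: the friends other than Sam, the place names, and the trust numbers are all hypothetical. It only shows how a trusted voice can outweigh a simple head count.

```python
from collections import defaultdict

def decide(suggestions, trust):
    """Return the place with the highest trust-weighted support, plus all scores."""
    scores = defaultdict(float)
    for person, place in suggestions.items():
        # Each suggestion counts for as much as the group trusts that person.
        scores[place] += trust.get(person, 0.0)
    winner = max(scores, key=scores.get)
    return winner, dict(scores)

# Hypothetical chat: two people say place_b, one trusted person says place_a.
suggestions = {"sam": "place_a", "friend_b": "place_b", "friend_c": "place_b"}
# Hypothetical trust weights, standing in for what the group has learned over time.
trust = {"sam": 0.9, "friend_b": 0.3, "friend_c": 0.2}

winner, scores = decide(suggestions, trust)
print(winner, scores)
```

A plain vote would pick place_b, two to one. With the weights applied, place_a wins, which is roughly what happened in the chat.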

You don’t need a perfect structure. Group chats, like neural nets, start messy. But they adapt. The more you use them, the better they route decisions. Some people become signal boosters. Others stay quiet unless it’s their domain. (Some people just add noise from time to time, heyooo!!) Over time, trust forms. Weighting happens. That’s the spirit of backpropagation: the system compares its answer with how things actually turned out and nudges its weights accordingly. Not just math but reflection, correction, iteration.
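Here is a rough sketch of how that weighting could be learned, under some loud assumptions: the three friends and the dinner history are invented, and the "network" is a single neuron, so backpropagation collapses to one chain-rule step per weight instead of a pass through many layers.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train(history, n_friends, lr=0.5, epochs=200):
    """Learn one trust weight per friend with plain gradient descent."""
    weights = [0.0] * n_friends  # everyone starts equally (un)trusted
    for _ in range(epochs):
        for signals, outcome in history:
            # Forward pass: weighted sum of who recommended the place.
            z = sum(w * s for w, s in zip(weights, signals))
            prediction = sigmoid(z)
            # Backward pass: for a sigmoid output with cross-entropy loss,
            # the gradient for each weight is (prediction - outcome) * signal.
            error = prediction - outcome
            weights = [w - lr * error * s for w, s in zip(weights, signals)]
    return weights

# Hypothetical history: signals[i] is 1 if friend i recommended the place,
# outcome is 1 if the meal turned out to be good.
# Friend 0 happens to recommend exactly the places that were good.
history = [
    ([1, 0, 1], 1),
    ([1, 1, 0], 1),
    ([0, 1, 1], 0),
    ([0, 1, 0], 0),
    ([1, 0, 0], 1),
    ([0, 0, 1], 0),
]

weights = train(history, n_friends=3)
print(weights)
```

After training, friend 0 ends up with the largest weight, because their recommendations were the ones that kept matching the outcome. Nobody assigned that trust up front; it came out of repeated correction.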

That pancake moment didn’t just teach me about neural networks. It reframed how I see any system that learns. The intelligence doesn’t sit in one part. It lives in the flow. In how signals are passed, shaped, reinforced, or forgotten.

Also, for anyone still curious: it’s Annelis.